3D object detection is a critical task for autonomous driving. Many important areas of autonomous driving, such as prediction, planning, and motion control, often require an accurate representation of the 3D space around the ego vehicle.

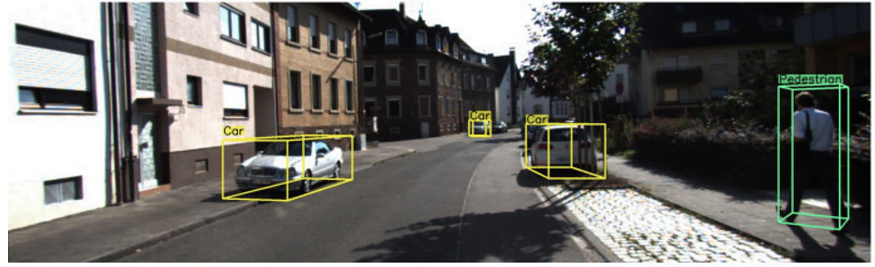

Monocular 3D Object Detection draws a 3D bounding box on an RGB image

In recent years, researchers have been exploiting high-precision lidar point clouds for accurate 3D object detection (notably the pioneering work on PointNet, which showed how to process point clouds directly with neural networks). However, lidar has its drawbacks, such as high cost and sensitivity to adverse weather. The ability to perform monocular 3D object detection with an RGB camera also adds redundancy in case other, more expensive modules fail. Therefore, achieving reliable and accurate 3D perception from only one or more RGB images remains the holy grail of autonomous driving perception.

How to lift a 2D task to 3D?

Detecting 3D objects from 2D images is a challenging task. It is fundamentally ill-posed because the depth dimension is collapsed when a 2D image is formed. However, the task remains tractable under certain conditions and with strong prior information. In autonomous driving in particular, most objects of interest, such as vehicles, are rigid objects with known geometry, so their 3D information can be recovered from monocular images.

1. Representation transformation (BEV, pseudo-lidar)

The cameras are often mounted on the roof of prototype self-driving cars, or behind the rearview mirror like normal dash cams. Camera images therefore usually have a perspective view of the world. This view is easy for human drivers to understand because it is similar to what we see while driving, but it presents two challenges for computer vision: occlusion, and scale changes with distance.

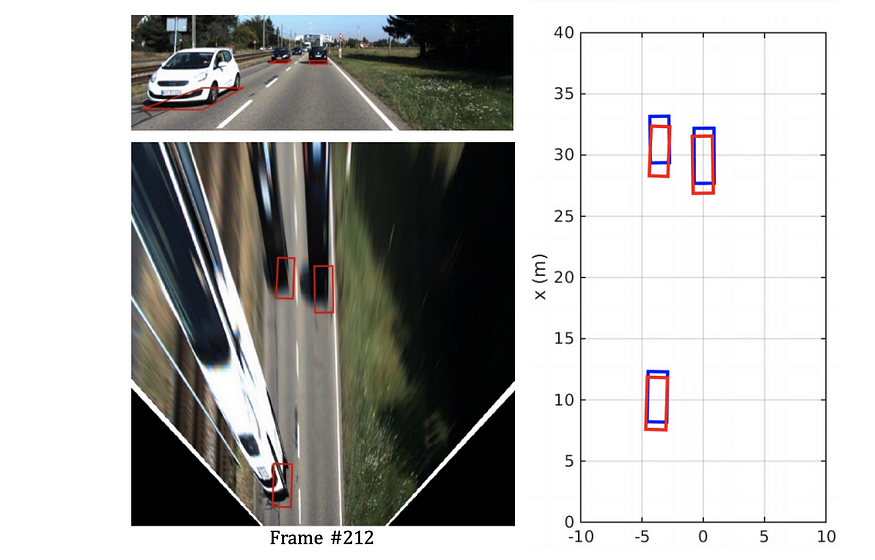

One way to alleviate this is to convert perspective images into a bird's-eye view (BEV). In BEV, cars have the same size regardless of their distance from the ego vehicle, and different vehicles do not overlap (given the reasonable assumption that, under normal driving conditions, no car sits on top of another in the 3D world). Inverse perspective mapping (IPM) is a common technique for generating BEV images, but it assumes that all pixels lie on the ground and that accurate extrinsics (and intrinsics) of the camera are known online. In practice, the extrinsics require online calibration to be accurate enough for IPM.
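To make the flat-ground assumption of IPM concrete, here is a minimal NumPy sketch. It assumes a world frame with Z up and the ground at Z = 0, and extrinsics (R, t) mapping world points to camera coordinates (x_cam = R x_world + t). Under these assumptions every ground point reaches the image through one homography, H = K [r1 r2 t]. The function names and the nearest-neighbor sampling are illustrative choices, not a particular library's API.

```python
import numpy as np


def ground_homography(K, R, t):
    # Ground points (X, Y, 0) project as x ~ K [r1 r2 t] (X, Y, 1)^T:
    # the third column of R multiplies Z = 0 and drops out.
    return K @ np.column_stack((R[:, 0], R[:, 1], t))


def ipm(image, K, R, t, x_min, y_min, nx, ny, res):
    """Nearest-neighbor IPM: BEV cell (i, j) samples ground point (x_min + i*res, y_min + j*res, 0)."""
    H = ground_homography(K, R, t)
    X, Y = np.meshgrid(x_min + res * np.arange(nx), y_min + res * np.arange(ny), indexing="ij")
    uvw = H @ np.stack([X.ravel(), Y.ravel(), np.ones(X.size)])
    in_front = uvw[2] > 1e-6  # third row is the camera-frame depth when K[2] = [0, 0, 1]
    depth = np.where(in_front, uvw[2], 1.0)  # avoid dividing by ~0 for points behind the camera
    u = np.round(uvw[0] / depth).astype(int)
    v = np.round(uvw[1] / depth).astype(int)
    h, w = image.shape[:2]
    ok = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    bev = np.zeros((nx * ny,) + image.shape[2:], dtype=image.dtype)
    bev[ok] = image[v[ok], u[ok]]
    return bev.reshape((nx, ny) + image.shape[2:])
```

Any pixel that is not actually on the ground (a car roof, a pedestrian's head) gets smeared along its viewing ray in the BEV output, which is exactly where the flat-ground assumption breaks down.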

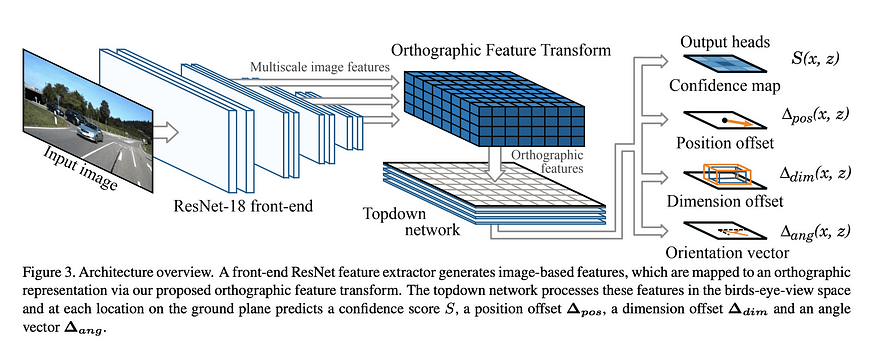

Orthographic Feature Transform (OFT, BMVC) is another way to lift perspective views to BEV, this time through a deep learning framework. The idea is to use an orthographic feature transform to map perspective image features into an orthographic bird's-eye-view map. A ResNet-18 extracts perspective image features. Voxel-based features are then generated by accumulating the image features over each voxel's projected image region. The voxel features are then collapsed along the vertical dimension to produce orthographic ground-plane features. Finally, another ResNet-like top-down network infers and refines the BEV map.
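The voxel pooling step can be sketched as follows, as a simplified illustration rather than the paper's implementation. Assumptions: voxels are axis-aligned cubes in the camera frame (x right, y down, z forward), i.e. the ground is taken to be parallel to the camera's x-z plane, and the vertical collapse is a plain sum, whereas OFT itself learns the collapse weights. An integral image makes each box average O(1), which is what keeps the transform cheap.

```python
import numpy as np


def integral_image(feat):
    """Zero-padded 2D cumulative sum of an (H, W, C) feature map."""
    ii = np.zeros((feat.shape[0] + 1, feat.shape[1] + 1, feat.shape[2]))
    ii[1:, 1:] = feat.cumsum(axis=0).cumsum(axis=1)
    return ii


def box_mean(ii, u0, v0, u1, v1):
    """Mean feature over columns [u0, u1) and rows [v0, v1), using four integral-image lookups."""
    area = (u1 - u0) * (v1 - v0)
    if area <= 0:
        return np.zeros(ii.shape[2])
    return (ii[v1, u1] - ii[v0, u1] - ii[v1, u0] + ii[v0, u0]) / area


def oft(feat, K, xs, ys, zs, size):
    """Pool image features into voxels of edge `size`, then sum over height: (len(xs), len(zs), C)."""
    h, w, c = feat.shape
    ii = integral_image(feat)
    offsets = np.array([[dx, dy, dz] for dx in (0, size) for dy in (0, size) for dz in (0, size)])
    bev = np.zeros((len(xs), len(zs), c))
    for i, x in enumerate(xs):
        for k, z in enumerate(zs):
            for y in ys:  # vertical axis; camera y points down
                corners = np.array([x, y, z]) + offsets
                if corners[:, 2].min() <= 0:
                    continue  # voxel is not entirely in front of the camera
                uvw = corners @ K.T
                u, v = uvw[:, 0] / uvw[:, 2], uvw[:, 1] / uvw[:, 2]
                # Image-plane bounding box of the voxel's 8 projected corners, clipped to the image.
                u0, u1 = np.clip([np.floor(u.min()), np.ceil(u.max())], 0, w).astype(int)
                v0, v1 = np.clip([np.floor(v.min()), np.ceil(v.max())], 0, h).astype(int)
                bev[i, k] += box_mean(ii, u0, v0, u1, v1)  # plain sum over height (OFT learns this)
    return bev
```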

The idea of OFT is simple, interesting, and effective, although the back-projection step could be improved by initializing the voxel-based features with some heuristics instead of naively. For example, image features inside a very large bbox cannot correspond to very distant objects. Another issue I have with this approach is its assumption of accurate extrinsics, which may not be available online.

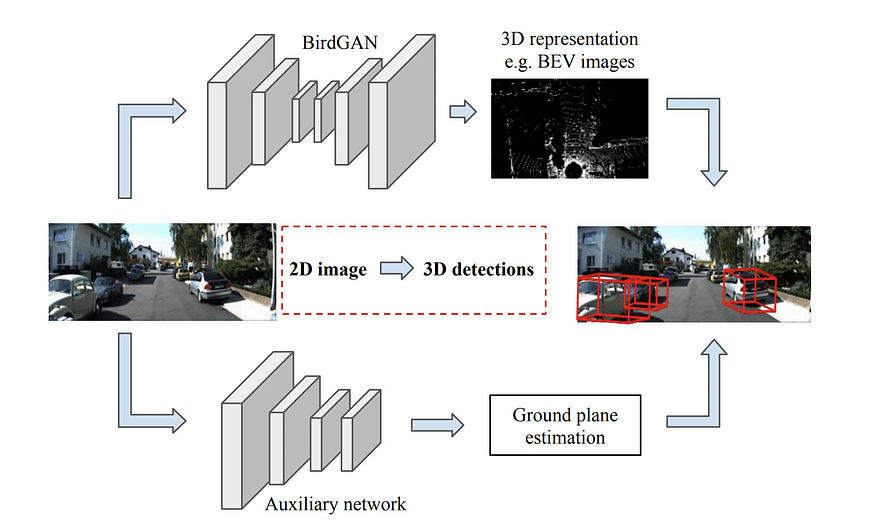

Another approach is BirdGAN (IROS), which uses GANs to perform image-to-image translation into BEV. The paper achieves good results, but, as the authors acknowledge, the conversion to BEV only works well up to 10 to 15 meters ahead, which limits its usefulness.

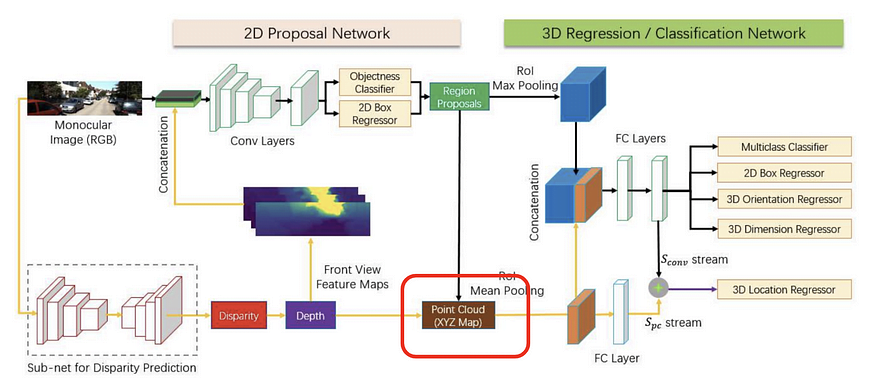

Then came a body of work built on the pseudo-lidar idea: generating a point cloud from the estimated depth of an image, made possible by recent advances in monocular depth estimation (itself a hot topic in autonomous driving, which I will review in the future). Previous efforts with RGBD images mainly treated depth as a fourth channel and applied standard networks to this input, with minimal changes to the first layer. Multi-Level Fusion (MLF, CVPR 2018) was the first to propose lifting the estimated depth information to 3D. It projects each pixel of an RGB image into 3D space using estimated depth (from MonoDepth with fixed pre-trained weights), and then fuses the resulting point cloud with image features to regress a 3D bounding box.
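The lifting step shared by MLF and pseudo-lidar is standard pinhole back-projection: a pixel (u, v) with depth d maps to X = (u - cx) d / fx, Y = (v - cy) d / fy, Z = d. A minimal NumPy sketch follows; the function name is hypothetical, and the output is in the camera frame (x right, y down, z forward), so feeding it to a lidar-based detector would need a further camera-to-lidar transform that is not shown.

```python
import numpy as np


def depth_to_pseudo_lidar(depth, K):
    """Back-project an (H, W) metric depth map into an (N, 3) camera-frame point cloud."""
    h, w = depth.shape
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    v, u = np.mgrid[0:h, 0:w]  # pixel row (v) and column (u) indices
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    points = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]  # keep only pixels with a valid (positive) depth
```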

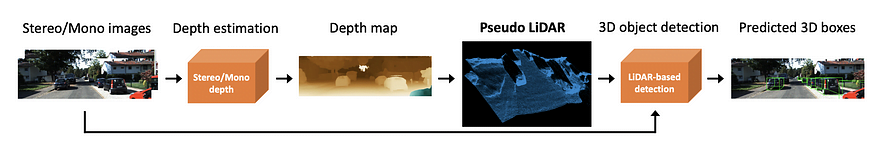

Pseudo-lidar (CVPR 2019) is perhaps the best known in this line of work. Inspired by MLF, it uses the generated pseudo-lidar in a more brute-force way, directly applying state-of-the-art lidar-based 3D object detectors. The authors argue that representation matters: 2D convolution on a depth map makes little sense, as neighboring pixels in a depth image may be physically far apart in 3D space.

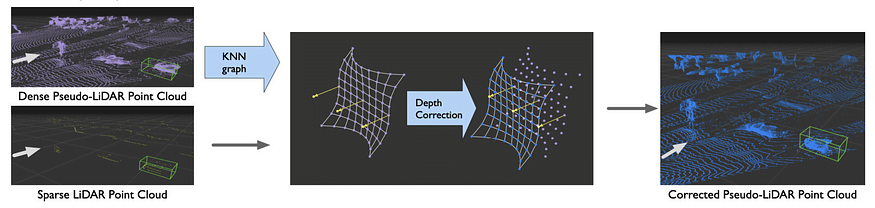

The authors of pseudo-lidar followed up with Pseudo-lidar++. The main improvement is that the generated pseudo-lidar point clouds are now augmented with sparse but accurate measurements from a low-cost lidar (although the paper simulates this lidar data). The idea of generating dense 3D representations from camera images and sparse depth measurements is very practical in autonomous driving, and I expect to see more work along this route in the coming years.

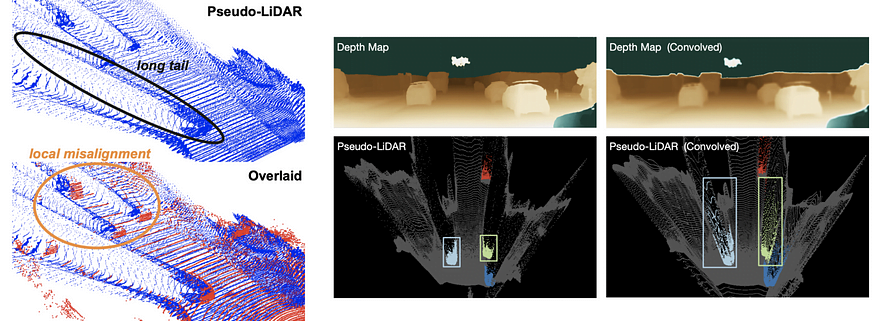

Others have followed and improved upon the original pseudo-lidar approach. Pseudo-Lidar Color (CVPR 2019) augments pseudo-lidar with color, either by plain concatenation, (x, y, z) → (x, y, z, r, g, b), or with an attention-based gating method that selectively passes RGB information. The paper also uses a simple yet effective point cloud segmentation method based on concepts from Frustum PointNet (CVPR 2018) and the average depth within a frustum.

Pseudo-Lidar end2end (ICCV 2019) points out two bottlenecks of pseudo-lidar methods: local misalignment caused by inaccurate depth estimation, and long tails (edge bleeding) caused by depth artifacts at object boundaries. It extends the pseudo-lidar work by using instance segmentation masks instead of bboxes in Frustum PointNet, and introduces a 2D/3D bounding box consistency loss.

ForeSeE also notes these shortcomings and emphasizes that not all pixels are equally important for depth estimation. Instead of using an off-the-shelf depth estimator like most previous methods, it trains two new depth estimators, one for the foreground and one for the background, and adaptively fuses the depth maps during inference.
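The 2D/3D bounding box consistency idea can be sketched as: project the 8 corners of a predicted 3D box, take their enclosing image rectangle, and penalize its distance to the 2D detection. This is a minimal NumPy illustration of that idea, not the paper's exact loss; the L1 form, the box convention (geometric center, dims = (l, h, w), yaw about the camera y axis), and all names are assumptions.

```python
import numpy as np


def box3d_corners(center, dims, yaw):
    """8 corners (8, 3) of a 3D box in camera coordinates (x right, y down, z forward).

    Assumed convention: `center` is the geometric center, dims = (l, h, w),
    and yaw rotates about the camera's vertical (y) axis.
    """
    l, h, w = dims
    x = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * l / 2
    y = np.array([1, 1, 1, 1, -1, -1, -1, -1]) * h / 2
    z = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * w / 2
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])
    return (rot @ np.stack([x, y, z])).T + np.asarray(center, dtype=float)


def projected_2d_box(corners, K):
    """Tightest image rectangle (u_min, v_min, u_max, v_max) around the projected corners."""
    uvw = corners @ K.T
    uv = uvw[:, :2] / uvw[:, 2:3]
    return np.concatenate([uv.min(axis=0), uv.max(axis=0)])


def box_consistency_loss(center, dims, yaw, box2d, K):
    """L1 gap between the rectangle enclosing the projected 3D box and the 2D detection."""
    return np.abs(projected_2d_box(box3d_corners(center, dims, yaw), K) - np.asarray(box2d)).sum()
```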

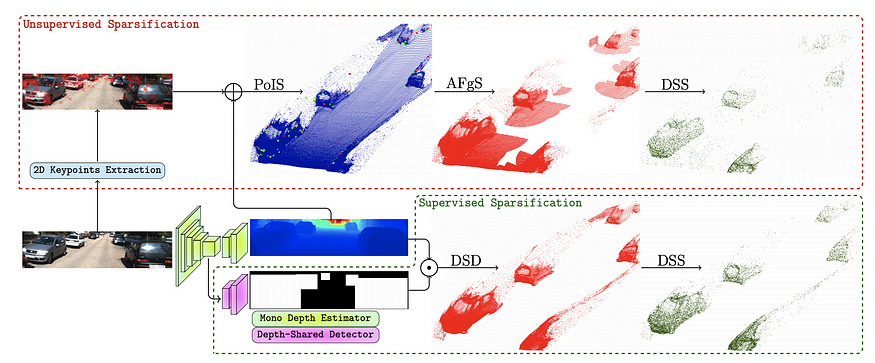

Bottlenecks of the pseudo-lidar approach: inaccurate depth estimation and edge bleeding (source)

RefinedMPL: Refined Monocular PseudoLiDAR for 3D Object Detection in Autonomous Driving digs into the point density of pseudo-lidar. It notes that pseudo-lidar is about one order of magnitude denser than a point cloud from, say, a 64-line lidar. The excess background points cause spurious false positives and extra computation. The paper proposes a two-step structured sparsification: first identify foreground points, then sparsify them. Foreground points are identified with one of two proposed approaches, one supervised and one unsupervised. The supervised method trains a 2D object detector and uses the union of the 2D bbox masks as the foreground mask to remove background points. The unsupervised method uses a Laplacian of Gaussian (LoG) to detect keypoints and takes their second-order nearest neighbors as foreground points. The foreground points are then sparsified uniformly within each depth bin. RefinedMPL found that even with only 10% of the points, 3D object detection performance does not drop and actually outperforms the baseline. The paper also attributes the performance gap between pseudo-lidar and real lidar point clouds to inaccurate depth estimation.
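The supervised variant of this pipeline can be sketched in two small steps: build a foreground mask from the union of 2D boxes, then subsample within depth bins. The helpers below are hypothetical, and keeping a fixed fraction of points per depth bin is one plausible reading of "sparsified uniformly within each depth bin"; the paper's exact sampling scheme may differ.

```python
import numpy as np


def foreground_mask(shape, boxes):
    """Union of 2D detection boxes (u0, v0, u1, v1) as a boolean (H, W) foreground mask."""
    mask = np.zeros(shape, dtype=bool)
    for u0, v0, u1, v1 in boxes:
        mask[int(v0):int(v1), int(u0):int(u1)] = True
    return mask


def sparsify_by_depth_bins(points, keep_ratio, bin_size, seed=0):
    """Randomly keep about `keep_ratio` of the points in each depth bin of width `bin_size`."""
    rng = np.random.default_rng(seed)
    bins = np.floor(points[:, 2] / bin_size).astype(int)
    kept = []
    for b in np.unique(bins):
        idx = np.flatnonzero(bins == b)
        n_keep = max(1, int(round(keep_ratio * idx.size)))  # keep at least one point per bin
        kept.append(rng.choice(idx, size=n_keep, replace=False))
    return points[np.sort(np.concatenate(kept))]
```

If the pseudo-lidar points are one per pixel in row-major order (no invalid-depth filtering), the foreground points are simply `points[foreground_mask(depth.shape, boxes).ravel()]`.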

Unsupervised and supervised sparsification based on pseudo-lidar point cloud in Refined MPL

Overall, I have high hopes for this line of approach. What we need is accurate depth estimation of the foreground, which can be augmented by sparse depth measurements, for example, from low-cost 4-line lidars.

2. Keypoints and Shapes

Vehicles are rigid bodies with distinctive common parts that can be used as landmarks/keypoints for detection, classification, and re-identification. Furthermore, objects of interest (vehicles, pedestrians, etc.) have roughly known sizes, both overall and between keypoints. This size information can be used effectively to estimate the distance to the ego vehicle.

Most studies along this line extend 2D object detection frameworks (single-stage, like YOLO or RetinaNet, or two-stage, like Faster RCNN) to predict keypoints.

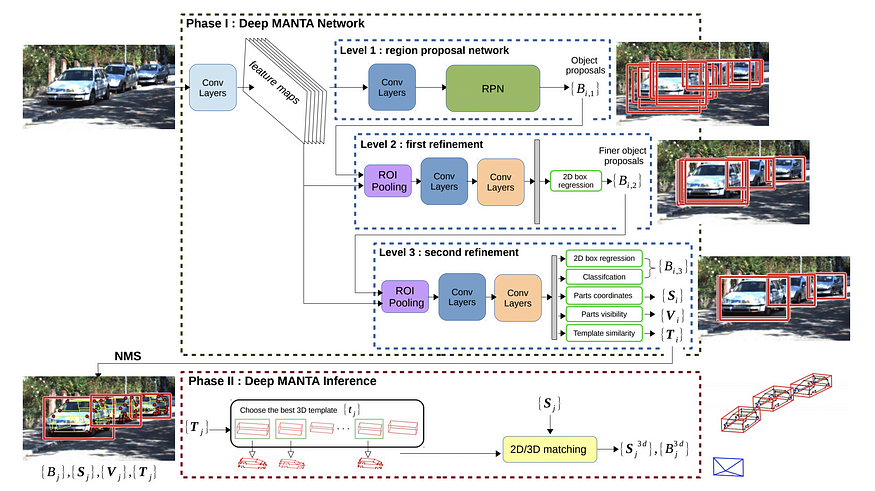

Deep MANTA (CVPR 2017) is a seminal work in this direction. In stage 1, used for both training and inference, it uses a cascaded Faster RCNN architecture to regress the 2D bbox, classification, 2D keypoints, visibility, and template similarity. A template is simply a (w, h, l) triple representing the 3D bbox. Stage 2 is used only at inference: based on the template similarity, it selects the best-matching 3D CAD model, performs 2D/3D matching, and recovers the 3D position and orientation.
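The 2D/3D matching step is a Perspective-n-Point (PnP) problem: given the CAD model's 3D keypoints, their detected 2D locations, and the intrinsics K, recover rotation and translation. The sketch below uses a plain Direct Linear Transform as a generic stand-in; it is not necessarily the solver Deep MANTA uses, and it needs at least 6 non-coplanar, noise-free correspondences. In practice one would use a robust solver such as OpenCV's `cv2.solvePnP` followed by nonlinear refinement.

```python
import numpy as np


def pnp_dlt(obj_pts, img_pts, K):
    """Estimate (R, t) with x ~ K (R X + t) from >= 6 non-coplanar 2D/3D correspondences."""
    obj_pts = np.asarray(obj_pts, dtype=float)
    n = len(obj_pts)
    # Normalized image coordinates remove the known intrinsics: x_n ~ [R | t] X.
    norm = (np.linalg.inv(K) @ np.column_stack([img_pts, np.ones(n)]).T).T
    rows = []
    for X, (x, y, _) in zip(obj_pts, norm):
        P = np.append(X, 1.0)
        rows.append(np.concatenate([P, np.zeros(4), -x * P]))  # r1.X + t1 - x (r3.X + t3) = 0
        rows.append(np.concatenate([np.zeros(4), P, -y * P]))  # r2.X + t2 - y (r3.X + t3) = 0
    _, _, vt = np.linalg.svd(np.array(rows))
    M = vt[-1].reshape(3, 4)  # [R | t] up to an unknown scale and sign
    M /= np.linalg.norm(M[:, :3], axis=1).mean()  # rows of a rotation matrix have unit norm
    if np.mean(M[2, :3] @ obj_pts.T + M[2, 3]) < 0:
        M = -M  # choose the sign that puts the points in front of the camera
    u, _, wt = np.linalg.svd(M[:, :3])
    return u @ wt, M[:, 3]  # project the 3x3 block onto the nearest rotation matrix
```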

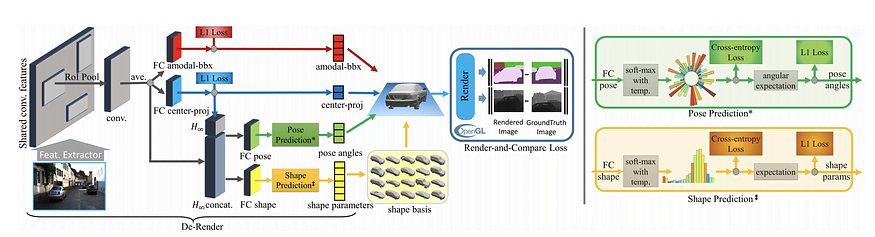

3D-RCNN (CVPR 2018) estimates the shape, pose, and size parameters of a car and renders (synthesizes) the scene. The rendered mask and depth map are then compared with the ground truth to produce a “render and compare” loss. Principal component analysis (PCA) is used to obtain a low-dimensional (10-d) representation of the shape space. Pose (orientation) and shape parameters are estimated from RoIPooled features using classification-based regression (see [my previous post on multimodal regression](https://towardsdatascience.com/anchors-and-multi-bin-loss-for-multi-modal-target-regression-647ea1974617)). This work requires a lot of input: 2D bboxes, 3D bboxes, 3D CAD models, 2D instance segmentation, and camera intrinsics. Also, the OpenGL-based “render and compare” loss seems quite heavily engineered.
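Classification-based regression of an angle usually means a multi-bin encoding: the circle is split into bins, the network classifies the bin, and it regresses a residual from that bin's center. Below is a generic sketch of the encode/decode side; the bin count and residual parametrization are assumptions, not 3D-RCNN's exact setup. During training, the bin index would get a cross-entropy loss and the residual a regression loss.

```python
import numpy as np


def encode_angle(theta, num_bins):
    """Angle -> (bin index, residual from that bin's center) over [0, 2*pi)."""
    width = 2 * np.pi / num_bins
    theta = np.mod(theta, 2 * np.pi)
    idx = min(int(theta // width), num_bins - 1)  # guard against float round-off near 2*pi
    return idx, theta - (idx + 0.5) * width


def decode_angle(bin_scores, residuals, num_bins):
    """Pick the highest-scoring bin and add that bin's predicted residual to its center."""
    width = 2 * np.pi / num_bins
    idx = int(np.argmax(bin_scores))
    return np.mod((idx + 0.5) * width + residuals[idx], 2 * np.pi)
```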

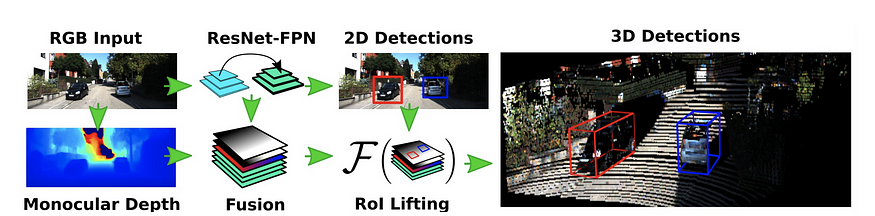

RoI-10D (CVPR 2019) gets its name from the 6DoF pose + 3DoF size of the 3D bounding box; the extra dimension refers to the shape space. Like 3D-RCNN, RoI-10D learns a low-dimensional (6-d) representation of the shape space, but with a 3D autoencoder. RGB features are augmented with estimated depth information, RoIPooled, and used to regress the rotation q (a quaternion), the RoI-relative 2D centroid (x, y), the depth z, and the metric extents (w, h, l). From these parameters, the 8 vertices of the 3D bounding box can be computed, and a corner loss can be formulated between all eight predicted vertices and the ground-truth vertices. Shape ground truth is labeled offline on KITTI3D by minimizing a reprojection loss. The use of shapes is also quite engineered.

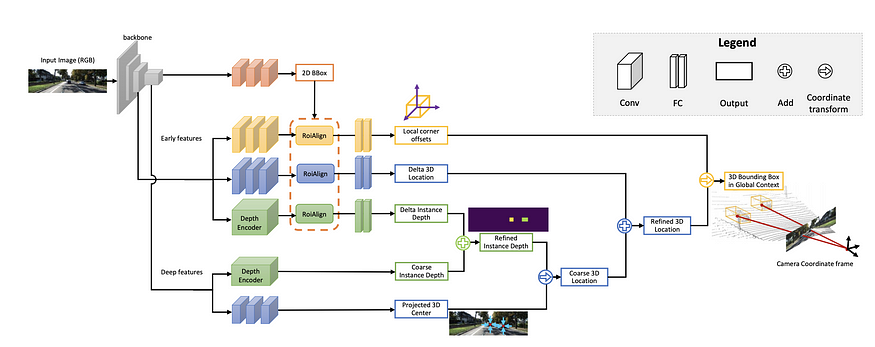

MonoGRNet (AAAI 2019) regresses the projection of the 3D center and a coarse instance depth, and uses both to estimate a coarse 3D position. It emphasizes the distinction between the center of the 2D bbox and the projection of the 3D bbox center onto the image. The projected 3D center can be seen as an artificial keypoint, similar to GPP. Unlike many other methods, it does not regress the (relatively easy) viewing angle, but directly regresses the offsets of the 8 vertices relative to the 3D center.
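A small numerical check shows why the distinction matters, and how the coarse 3D position follows from the projected center plus instance depth. The box convention (geometric center, dims = (l, h, w), yaw about the camera y axis), the intrinsics, and the example car are hypothetical values chosen for illustration.

```python
import numpy as np


def box_corners(center, dims, yaw):
    """8 corners (8, 3) of a box in camera coordinates (x right, y down, z forward)."""
    l, h, w = dims
    x = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * l / 2
    y = np.array([1, 1, 1, 1, -1, -1, -1, -1]) * h / 2
    z = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * w / 2
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])
    return (rot @ np.stack([x, y, z])).T + np.asarray(center, dtype=float)


def project(points, K):
    """Pinhole projection of (N, 3) or (3,) camera-frame points to (N, 2) pixels."""
    uvw = np.atleast_2d(points) @ K.T
    return uvw[:, :2] / uvw[:, 2:3]


def lift_center(uv, depth, K):
    """Back-project a projected 3D center (u, v) at the given instance depth."""
    return depth * (np.linalg.inv(K) @ np.array([uv[0], uv[1], 1.0]))


K = np.array([[700.0, 0, 640], [0, 700.0, 360], [0, 0, 1]])
center = np.array([4.0, 1.0, 12.0])  # a car off to the right, 12 m ahead
uv = project(box_corners(center, (4.0, 1.5, 1.8), 0.6), K)
box_center = (uv.min(axis=0) + uv.max(axis=0)) / 2  # center of the 2D bbox
proj_center = project(center, K)[0]  # projection of the 3D center
```

For this off-axis, rotated car the two image points differ by several pixels, because perspective enlarges the near side of the box; lifting `proj_center` at the instance depth recovers the 3D center exactly, while lifting the 2D bbox center would not.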

Ground Plane Polling (GPP) generates virtual 2D keypoints from 3D bbox annotations. It purposely predicts more attributes than are needed to estimate the 3D bbox (an overdetermined setup) and, similar to RANSAC, uses these predictions to find the largest consistent set of attributes, which makes it more robust to outliers.

GPP predicts the footprints of 3 tires and the vertical edge of the back/front panel closest to the ego vehicle (source)

RTM3D (real-time mono-3D) also uses virtual keypoints, with a CenterNet-like structure that directly detects the 2D projections of all 8 cuboid vertices plus the cuboid center. The paper also directly regresses distance, orientation, and size. Instead of using these values directly to form cuboids, it uses them as initial values (priors) for an optimizer that fits the 3D bbox to the detected keypoints. It claims to be the first real-time monocular 3D object detection algorithm (0.055 s/frame).
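The keypoint fitting can be sketched as Gauss-Newton minimization of the reprojection error of the 9 keypoints over (x, y, z, yaw), starting from the regressed priors with the dimensions held fixed. This is a simplified illustration: the sketch uses the priors only as the starting point, the paper's exact objective may include further terms, and the box convention and finite-difference Jacobian are assumptions.

```python
import numpy as np


def box_keypoints(params, dims):
    """8 corners + center (9, 3) of a box with params = (x, y, z, yaw) in camera coordinates."""
    x, y, z, yaw = params
    l, h, w = dims
    cx = np.array([1, 1, -1, -1, 1, 1, -1, -1, 0]) * l / 2
    cy = np.array([1, 1, 1, 1, -1, -1, -1, -1, 0]) * h / 2
    cz = np.array([1, -1, -1, 1, 1, -1, -1, 1, 0]) * w / 2
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])
    return (rot @ np.stack([cx, cy, cz])).T + np.array([x, y, z])


def reprojection_residual(params, dims, kps2d, K):
    uvw = box_keypoints(params, dims) @ K.T
    return (uvw[:, :2] / uvw[:, 2:3] - kps2d).ravel()


def fit_box(init, dims, kps2d, K, iters=20, eps=1e-6):
    """Gauss-Newton refinement of (x, y, z, yaw), initialized from the regressed priors."""
    p = np.asarray(init, dtype=float)
    for _ in range(iters):
        r = reprojection_residual(p, dims, kps2d, K)
        # Central finite-difference Jacobian, one column per parameter.
        J = np.column_stack([
            (reprojection_residual(p + d, dims, kps2d, K)
             - reprojection_residual(p - d, dims, kps2d, K)) / (2 * eps)
            for d in np.eye(4) * eps
        ])
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)  # Gauss-Newton step
        p = p + step
    return p
```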

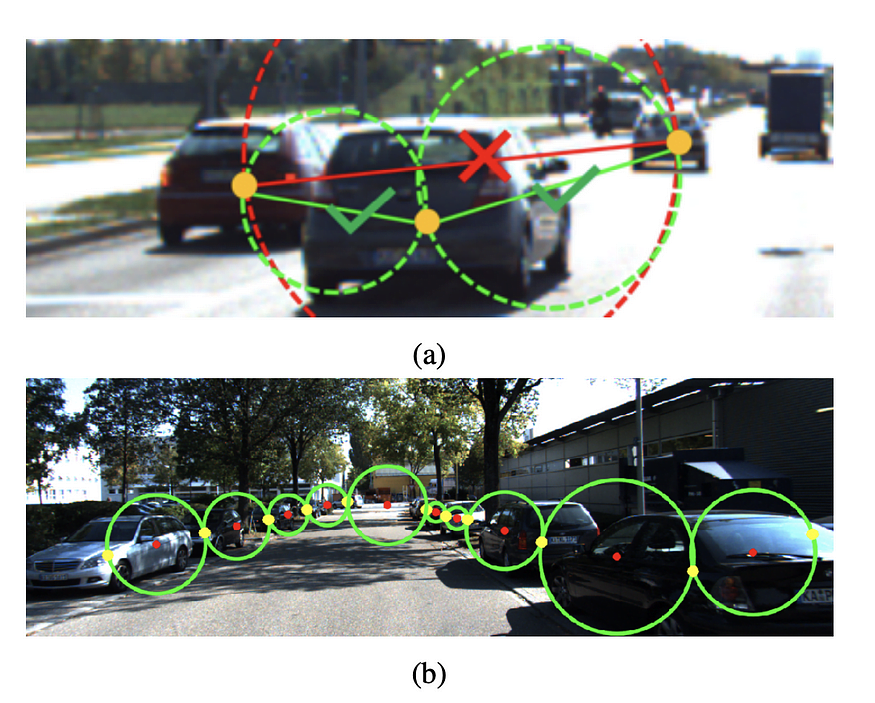

MonoPair draws a lot of inspiration from CenterNet and focuses on improving detection by exploiting the spatial relationships between pairs of cars. It not only detects 3D bboxes directly, as in CenterNet, but also predicts virtual keypoints that encode pairwise constraints. A pairwise keypoint is defined as the midpoint between two objects that are nearest neighbors. This definition of “relational keypoints” is similar to that in Pixels to Graphs (NIPS 2017). MonoPair then refines the objects' 3D locations with a global optimization over these pairwise constraints, and the paper reports that incorporating uncertainty into depth estimation yields the largest performance gain.
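Building the pairwise keypoints can be sketched as below, assuming each object is paired with its nearest neighbor by center distance and duplicate pairs are merged. The function is hypothetical and works on 2D image centers or 3D centers alike; MonoPair's exact pairing rules may differ.

```python
import numpy as np


def pair_keypoints(centers):
    """Pair each object with its nearest neighbor; return {(i, j): midpoint} for unique pairs."""
    centers = np.asarray(centers, dtype=float)
    if len(centers) < 2:
        return {}
    pairs = set()
    for i, c in enumerate(centers):
        dist = np.linalg.norm(centers - c, axis=1)
        dist[i] = np.inf  # an object cannot pair with itself
        j = int(np.argmin(dist))
        pairs.add((min(i, j), max(i, j)))  # (i, j) and (j, i) are the same pair
    return {(i, j): (centers[i] + centers[j]) / 2 for i, j in sorted(pairs)}
```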

Pairwise matching strategy for training and inference in MonoPair

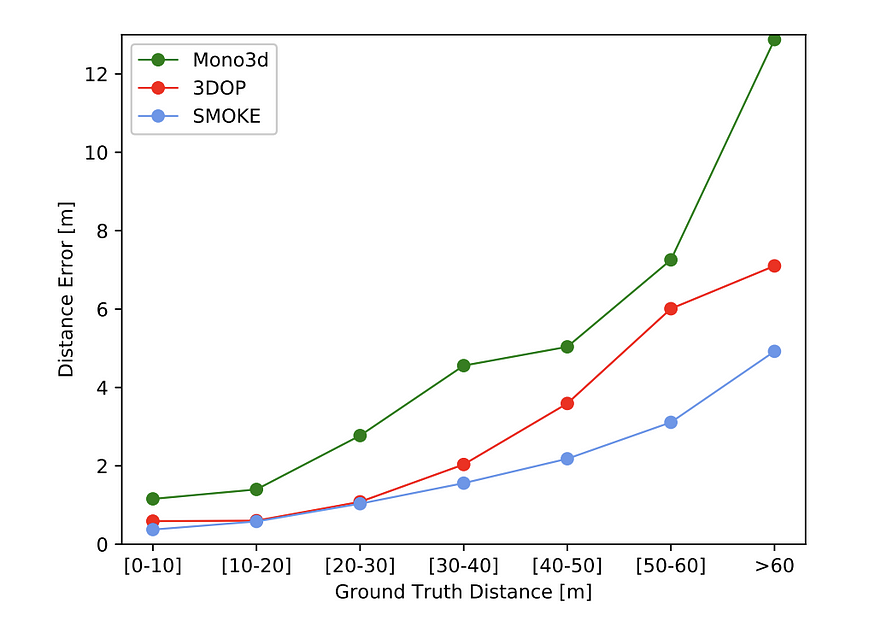

SMOKE (Single-Stage Monocular 3D Object Detection via Keypoint Estimation, CVPRW 2020) is also inspired by CenterNet. It drops 2D bbox regression entirely and directly predicts the 3D bbox. It encodes the 3D bounding box as a point at the projection of the 3D cuboid center, with the other parameters (size, distance, yaw) as additional attributes of that point. The loss is a 3D corner loss optimized with a disentangled L1 loss inspired by MonoDIS. Compared with predicting the 7DoF parameters through a weighted sum of separate loss functions, this formulation implicitly weights the loss terms according to their contribution to the 3D bounding box prediction. SMOKE also achieves a distance estimation error below 5% within 60 meters.

Average depth estimation error by SMOKE

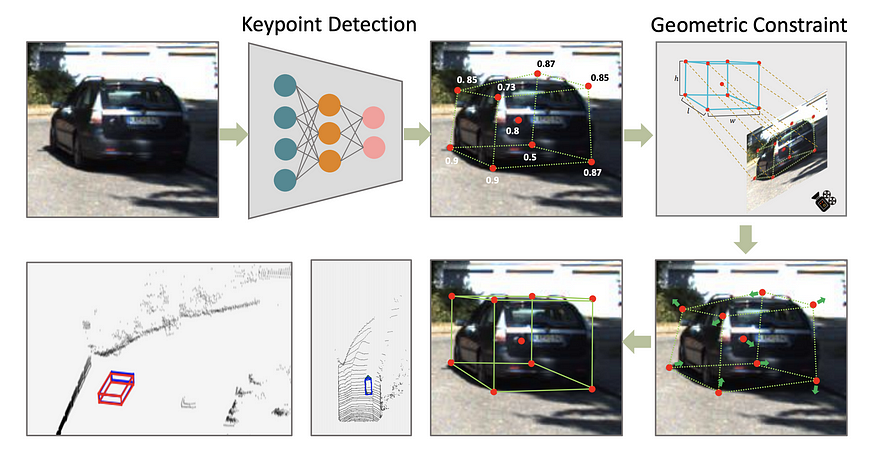

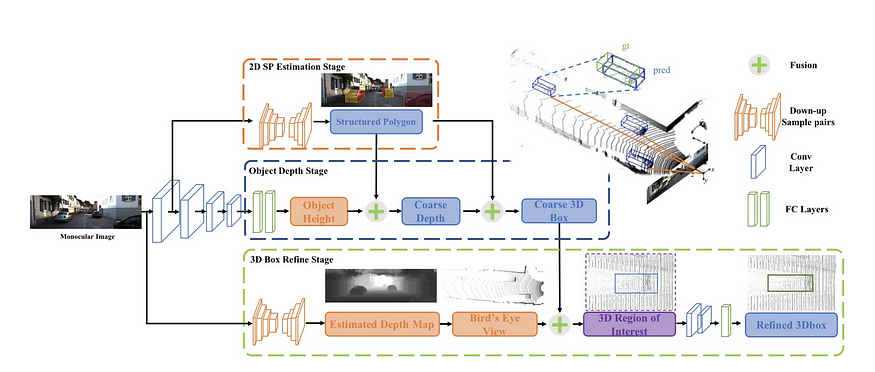

Monocular 3D Object Detection with Decoupled Structured Polygon Estimation and Height-Guided Depth Estimation (AAAI 2020) is the first work to explicitly state that estimating the 2D projections of the 3D vertices (called the Structured Polygon in the paper) can be fully decoupled from depth estimation. It uses a method similar to RTM3D to regress the eight projected points of the cuboid, and then uses the vertical edge heights as a strong prior to guide distance estimation. This produces a coarse 3D cuboid, which is then used as a seed position in a BEV image (generated in a way similar to Pseudo-Lidar) for fine-tuning. This leads to better results than monocular Pseudo-Lidar.

Decoupled Structured Polygon uses cuboid keypoint regression and pseudo-lidar for fine-tuning.

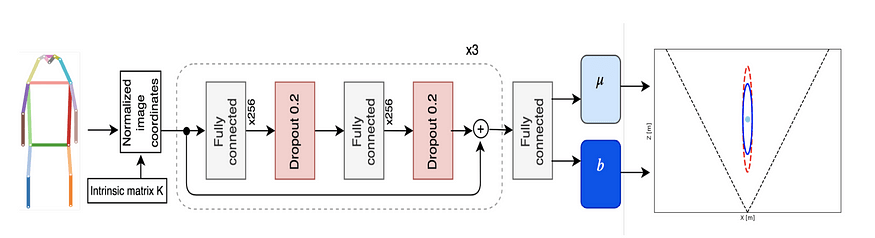

Monoloco (ICCV) differs somewhat from the works above, as it focuses on localizing pedestrians, which is arguably more challenging than 3D vehicle detection since pedestrians are not rigid bodies and come in various poses and deformations. It uses keypoint detectors (top-down Mask RCNN or bottom-up Pif-Paf) to extract human keypoints. As a baseline, it exploits the relatively fixed height of pedestrians, especially the shoulder-to-hip segment (~50 cm), to infer depth, which is very similar to what MonoGRNet V2 and GS3D do. The paper uses a multi-layer perceptron (a fully connected neural network) to regress depth from the lengths of all keypoint segments and demonstrates improvements over the simple baseline. It also produces realistic uncertainty estimates by modeling aleatoric and epistemic uncertainty, which is critical in safety-critical applications such as autonomous driving.
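One common way to model aleatoric uncertainty for a regressed distance is to have the network predict a spread b alongside the distance and train with a Laplace negative log-likelihood. The sketch below is that generic loss; MonoLoco's exact formulation may differ, and predicting log b (to keep b positive) is an assumption.

```python
import numpy as np


def laplace_nll(pred_depth, log_b, true_depth):
    """Negative log-likelihood of true_depth under Laplace(pred_depth, b), with b = exp(log_b).

    A large b down-weights the L1 error but pays a log(2b) penalty, so the
    network cannot inflate its uncertainty for free.
    """
    b = np.exp(log_b)
    return np.abs(true_depth - pred_depth) / b + np.log(2 * b)
```

At inference, the predicted b directly gives a confidence interval around each distance estimate, which is what downstream planning can consume.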

In conclusion, extracting keypoints from 2D images is practical and has the potential to infer 3D information without direct supervision from lidar-based 3D annotations. However, this approach requires rather tedious annotation of multiple keypoints per object and involves engineering-heavy 3D model manipulation.

3. Distance estimation through 2D/3D constraints

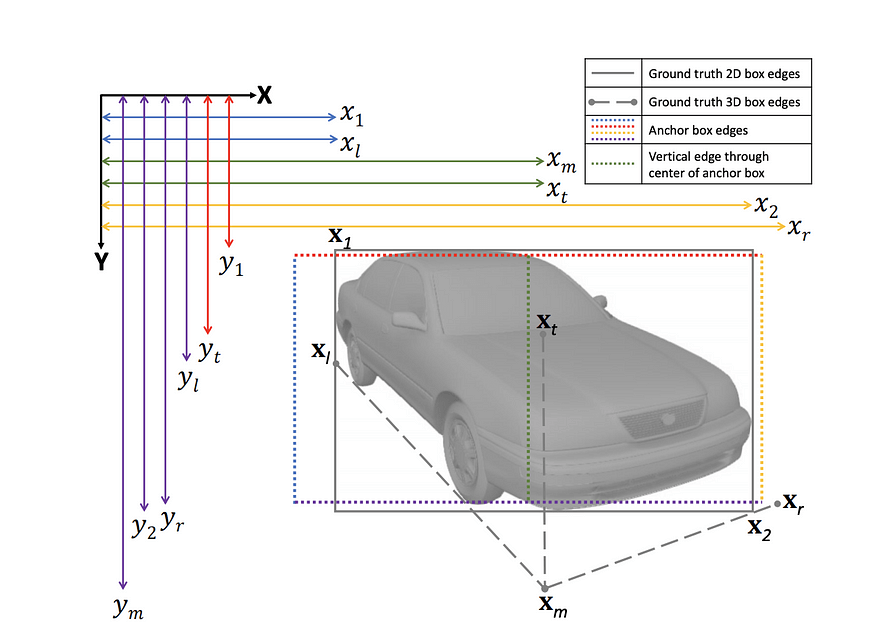

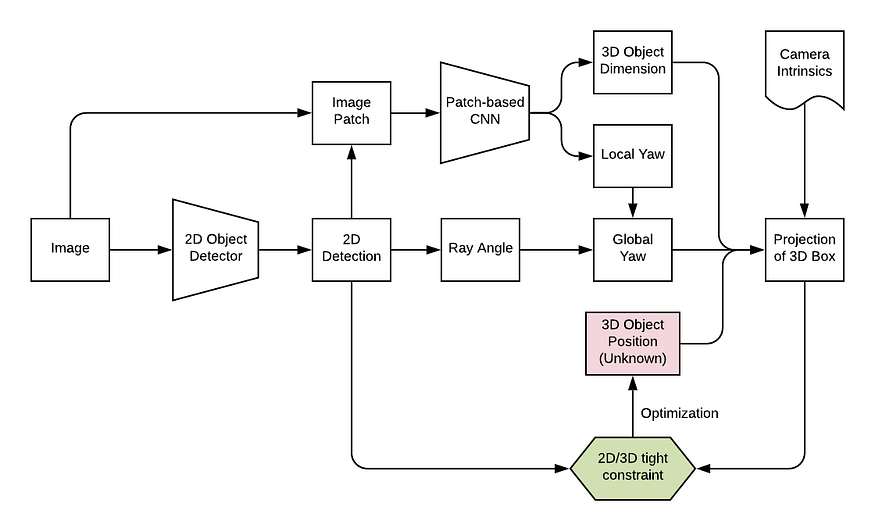

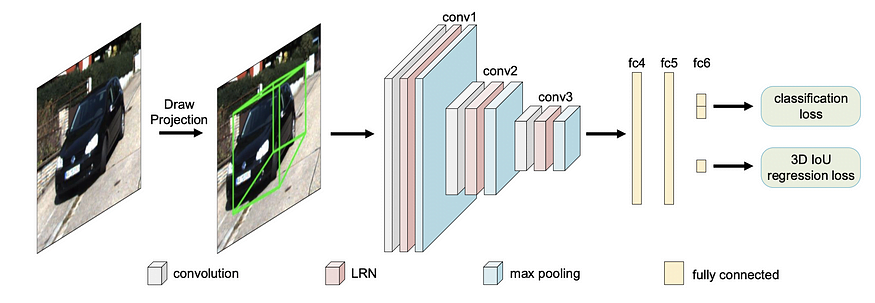

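Research in this direction leverages 2D/3D consistency to lift 2D to 3D. The seminal work is Deep3DBox (CVPR 2017). It regresses the object's dimensions and orientation from the 2D crop, then uses the geometric constraint that the 3D box should fit tightly inside the 2D bounding box: each side of the 2D box must be touched by the projection of at least one 3D corner. Solving this overconstrained optimization problem yields the 3D position, lifting the 2D bounding box to 3D.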
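Given the regressed dimensions and yaw, the translation solve can be sketched as follows. For each assignment of a corner to each of the four box sides, the tight-fit constraints are linear in the translation T = (Tx, Ty, Tz), so a least-squares solve gives a candidate, and the candidate whose reprojected box best matches the detection wins. This sketch simply brute-forces all 8^4 assignments, whereas the paper prunes many of them; the box convention and names are assumptions.

```python
import itertools

import numpy as np


def box_corners(dims, yaw):
    """Object-centered corners (8, 3) rotated by yaw about the camera y axis; dims = (l, h, w)."""
    l, h, w = dims
    x = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * l / 2
    y = np.array([1, 1, 1, 1, -1, -1, -1, -1]) * h / 2
    z = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * w / 2
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])
    return (rot @ np.stack([x, y, z])).T


def solve_translation(box2d, dims, yaw, K):
    """Find T so the projected 3D box fits box2d = (u_min, v_min, u_max, v_max) tightly."""
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    corners = box_corners(dims, yaw)
    best_T, best_err = None, np.inf
    for combo in itertools.product(range(8), repeat=4):  # one corner per box side
        A, b = [], []
        for side, idx in enumerate(combo):
            X, Y, Z = corners[idx]
            if side % 2 == 0:  # u_min / u_max: fx (X + Tx) = (u - cx) (Z + Tz)
                u = box2d[side]
                A.append([fx, 0.0, -(u - cx)]); b.append((u - cx) * Z - fx * X)
            else:  # v_min / v_max: fy (Y + Ty) = (v - cy) (Z + Tz)
                v = box2d[side]
                A.append([0.0, fy, -(v - cy)]); b.append((v - cy) * Z - fy * Y)
        T = np.linalg.lstsq(np.array(A), np.array(b), rcond=None)[0]
        cam = corners + T
        if cam[:, 2].min() <= 0:
            continue  # box would be (partly) behind the camera
        uv = (cam @ K.T)[:, :2] / cam[:, 2:3]
        err = np.abs(np.concatenate([uv.min(axis=0), uv.max(axis=0)]) - box2d).sum()
        if err < best_err:
            best_T, best_err = T, err
    return best_T
```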

FQNet (CVPR) extends the idea of Deep3DBox beyond tight fitting. It adds a refinement stage to Deep3DBox by densely sampling around the 3D seed locations (obtained from the tight 2D/3D constraints) and then scoring each 2D patch with the corresponding rendered 3D wireframe. However, dense sampling (as in Mono3D, discussed later) is slow and computationally inefficient.

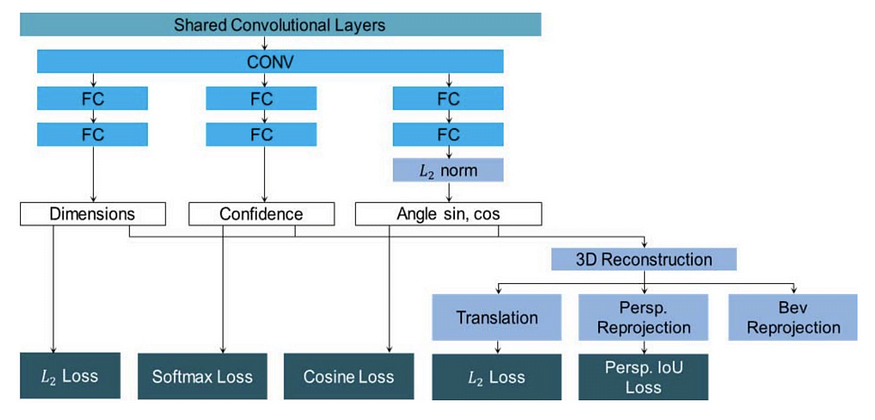

MVRA (Multi-View Reprojection Architecture, ICCV) builds the 2D/3D constrained optimization into a neural network and handles truncated cases with an iterative approach. It introduces a 3D reconstruction layer to lift 2D to 3D. Instead of solving overconstrained equations, it uses two losses in two different spaces: 1) an IoU loss in the perspective view, between the reprojected 3D bbox and the 2D bbox, and 2) an L2 loss in BEV, between the estimated distance and the ground-truth distance. It recognizes that Deep3DBox does not handle truncated boxes well, because the four sides of such a bounding box no longer correspond to the real physical extent of the vehicle. This motivates an iterative orientation optimization for truncated bboxes that uses only 3 constraints instead of 4, dropping xmin for left-truncated cars or xmax for right-truncated cars. The global yaw is estimated by trial and error in two iterations, with steps of pi/8 and pi/32.
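The two-iteration yaw search can be sketched as a coarse-to-fine grid search. Here `score` is a hypothetical placeholder; in MVRA it would measure how well the reprojected 3D box fits the 2D box (with xmin or xmax dropped for truncated cars), while the usage below uses a toy score peaked at 1.0 rad.

```python
import numpy as np


def coarse_to_fine_yaw(score, coarse_step=np.pi / 8, fine_step=np.pi / 32):
    """Maximize score(yaw) on a coarse grid over [-pi, pi), then on a finer grid around the best."""
    coarse = -np.pi + coarse_step * np.arange(int(round(2 * np.pi / coarse_step)))
    best = max(coarse, key=score)
    n = int(round(coarse_step / fine_step))
    fine = best + fine_step * np.arange(-n, n + 1)  # window of +/- one coarse step
    return max(fine, key=score)


yaw = coarse_to_fine_yaw(lambda a: np.cos(a - 1.0))  # toy score with its peak at 1.0 rad
```

With these steps the search evaluates 16 + 9 candidates and lands within pi/64 of the true peak, which is far cheaper than a single uniform pass at pi/32.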

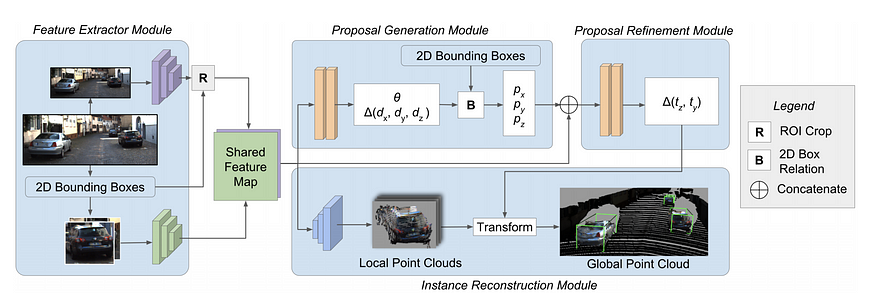

MonoPSR (CVPR 2019) comes from the authors of the popular sensor fusion framework AVOD. It first generates 3D proposals and then reconstructs local point clouds of dynamic objects. The centroid proposal stage uses the 2D box height and the regressed 3D object height to infer depth, and re-projects the 2D bounding box center into 3D space at the estimated depth. This proposal stage is very practical and quite accurate (mean absolute error ~1.5 m). The shape reconstruction branch regresses a local point cloud of the object and compares it with the ground truth both as a point cloud and in the camera image (after projection). It echoes MonoGRNet and TLNet's view that estimating depth for the entire scene is overkill for 3D object detection. An instance-centric focus makes the task easier by avoiding regression over large depth ranges.
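The centroid proposal boils down to two pinhole relations: depth Z = fy * H / h from the regressed 3D height H and the 2D box height h in pixels, then back-projection of the 2D box center at that depth. A minimal sketch with hypothetical names; it treats the 2D box height as the projected object height and the 2D box center as the projected 3D center, both approximations that a refinement stage has to absorb.

```python
import numpy as np


def centroid_proposal(box2d, obj_height, K):
    """Coarse 3D centroid from a 2D box (u0, v0, u1, v1) and a regressed 3D object height (m)."""
    u0, v0, u1, v1 = box2d
    depth = K[1, 1] * obj_height / (v1 - v0)  # pinhole: pixel height = fy * H / Z
    center_px = np.array([(u0 + u1) / 2, (v0 + v1) / 2, 1.0])
    return depth * (np.linalg.inv(K) @ center_px)  # back-project the 2D center to that depth
```

For example, with fy = 700 px, a 1.5 m tall car whose box is 105 px tall is placed at 700 * 1.5 / 105 = 10 m.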

4. Direct generation of 3D proposals

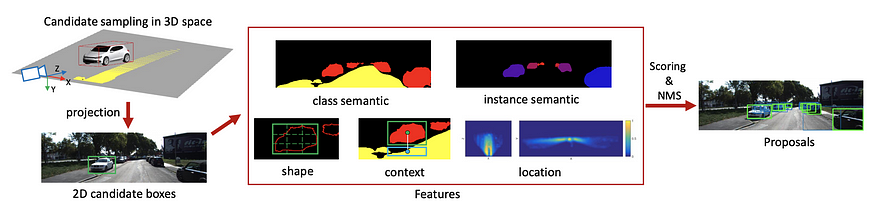

Uber ATG’s Mono3D (CVPR 2016) is one of the seminal works in monocular 3D object detection. It focuses on direct 3D proposal generation and generates dense proposals based on the prior that cars sit on the ground. It then scores each proposal with many hand-crafted features and performs NMS to obtain the final detections. In a way, it is similar to FQNet, which scores 3D bbox proposals by checking back-projected wireframes, although FQNet places its 3D proposals around Deep3DBox’s initial guess.

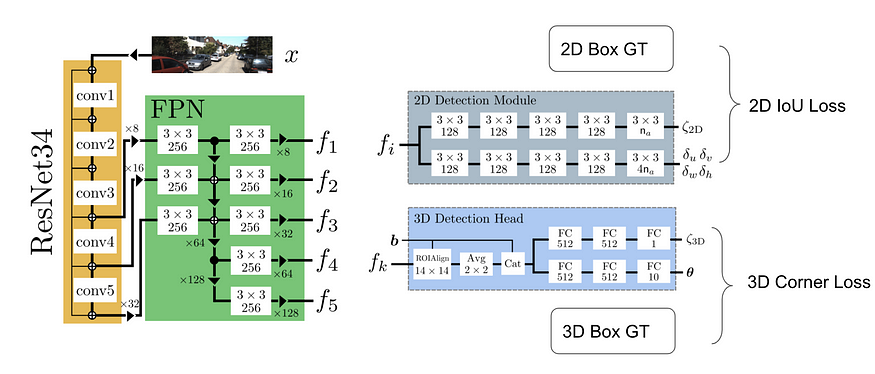

MonoDIS (ICCV 2019) extends the RetinaNet architecture to directly regress both 2D and 3D bboxes. Instead of directly supervising each component of the 2D and 3D bbox outputs, it takes a holistic view of bbox regression and uses a 2D (signed) IoU loss and a 3D corner loss. These losses are usually hard to train, so it proposes a disentangling technique: all elements except one group (of one or more elements) are replaced with their ground-truth values and the loss is computed, so that only that group's parameters are trained. This selective process cycles through the groups until every element of the prediction is covered, and the total loss is accumulated in one forward pass. This disentangled training enables end-to-end training of 2D/3D bboxes and can be extended to many other applications.
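The disentangling trick can be sketched for a 3D corner loss with three parameter groups (center, dimensions, yaw). For each group, the box is built from that group's prediction and the ground truth for everything else, and the per-group corner losses are summed. The grouping, the box convention, and the mean-L1 corner distance are assumptions for illustration. In an autodiff framework the ground-truth values would be constants, so each term only sends gradients to its own group.

```python
import numpy as np


def box_corners(center, dims, yaw):
    """8 corners (8, 3) of a box in camera coordinates; dims = (l, h, w), yaw about camera y."""
    l, h, w = dims
    x = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * l / 2
    y = np.array([1, 1, 1, 1, -1, -1, -1, -1]) * h / 2
    z = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * w / 2
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])
    return (rot @ np.stack([x, y, z])).T + np.asarray(center, dtype=float)


def disentangled_corner_loss(pred, gt):
    """Sum of per-group corner losses; pred and gt are dicts with "center", "dims", "yaw"."""
    gt_corners = box_corners(gt["center"], gt["dims"], gt["yaw"])
    total = 0.0
    for group in ("center", "dims", "yaw"):
        mixed = dict(gt, **{group: pred[group]})  # swap in only this group's prediction
        diff = box_corners(mixed["center"], mixed["dims"], mixed["yaw"]) - gt_corners
        total += np.abs(diff).sum(axis=1).mean()  # mean L1 distance over the 8 corners
    return total
```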

Architecture and losses for MonoDIS (source)

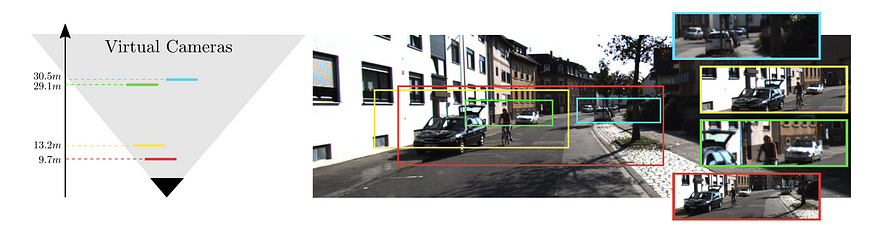

The authors of MonoDIS further improved the algorithm with the idea of virtual cameras. The main idea is that the model has to learn different representations for cars at different distances, and it generalizes poorly to cars outside the distance range seen during training. Covering a larger distance range would require increasing the model's capacity and the accompanying training data. Virtual Camera instead proposes to break the image into multiple patches, each containing at least one entire car and with limited depth variation. During inference, a pyramid-like tiling of the image is generated. Each tile, or strip, corresponds to a specific distance range and has a varying width but the same height.

CenterNet is a general object detection framework that can be extended to many detection-related tasks, such as keypoint detection, depth estimation, and orientation estimation. It first regresses a heatmap indicating the confidence of object center locations, and then regresses other object attributes at those centers. Extending CenterNet to monocular 3D detection is straightforward: the 2D and 3D bounding box parameters simply become additional attributes of each center point.
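Decoding such a center-based output can be sketched in NumPy: a 3x3 max filter keeps only local heatmap peaks (CenterNet's max-pooling replacement for NMS), and the regressed attributes are read out at each peak. The function name, the threshold, and the attribute layout (C, H, W) are assumptions.

```python
import numpy as np


def decode_centers(heatmap, attributes, k=10, threshold=0.1):
    """Return up to k (row, col, score, attribute_vector) tuples for local heatmap maxima.

    heatmap: (H, W) center confidences; attributes: (C, H, W) per-pixel regressed
    values (e.g. depth, dimensions, orientation) read out at each detected center.
    """
    h, w = heatmap.shape
    padded = np.pad(heatmap, 1, mode="constant", constant_values=-np.inf)
    # 3x3 max filter: a pixel is a peak if no neighbor is larger.
    neighborhood_max = np.max(
        [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)], axis=0
    )
    rows, cols = np.nonzero((heatmap == neighborhood_max) & (heatmap >= threshold))
    order = np.argsort(-heatmap[rows, cols])[:k]  # highest scores first
    return [
        (int(rows[i]), int(cols[i]), float(heatmap[rows[i], cols[i]]), attributes[:, rows[i], cols[i]])
        for i in order
    ]
```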

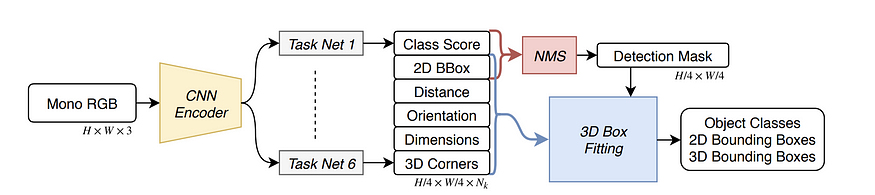

SS3D also builds on a CenterNet-like structure: it first finds the centers of potential objects and then regresses both 2D and 3D bounding boxes. The regression head predicts enough 2D and 3D bounding box information for a subsequent optimization step. The 2D and 3D bounding box tuples are parameterized by 26 surrogate elements in total, and the total loss is a weighted sum over these 26 numbers.

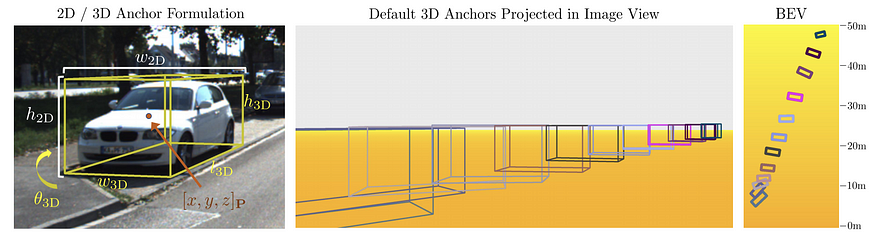

M3D-RPN (ICCV 2019) regresses 2D and 3D bbox parameters simultaneously, using 3D mean statistics precomputed for each 2D anchor. It directly regresses 2D and 3D bounding boxes (11 + num_class values per anchor), similar to SS3D regressing 26 numbers. The paper proposes the interesting idea of a 2D/3D anchor: essentially still 2D anchors tiled across the entire image, but carrying 3D bounding box properties. Depending on its location, a 2D anchor may have different 3D prior statistics. M3D-RPN also proposes separate convolutional filters for different row bins (depth-aware convolution), since depth is largely correlated with image rows in autonomous driving scenes.
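Depth-aware convolution can be sketched for a single channel: the rows are split into B horizontal bins, and each bin gets its own kernel. This loop version is for clarity only (a real layer would be batched and multi-channel), and the function name and equal-height bins are assumptions.

```python
import numpy as np


def depth_aware_conv(x, kernels):
    """Row-binned convolution: rows are split into len(kernels) bins, each with its own kernel.

    x: (H, W) single-channel feature map; kernels: (B, k, k) with odd k.
    As in deep-learning layers, this is cross-correlation with zero padding.
    """
    h, w = x.shape
    num_bins, kh, kw = kernels.shape
    xp = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    bin_of_row = np.arange(h) * num_bins // h  # rows 0..H-1 -> bins 0..B-1
    out = np.zeros((h, w))
    for r in range(h):
        kernel = kernels[bin_of_row[r]]
        for c in range(w):
            out[r, c] = np.sum(xp[r:r + kh, c:c + kw] * kernel)
    return out
```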

D4LCN (CVPR 2020) builds on the depth-aware convolution of M3D-RPN by introducing a dynamic filter prediction branch. This branch takes the depth prediction as input and generates a filter feature volume, which produces a different filter for each specific location, in terms of both weights and dilation rate. D4LCN also uses the 2D/3D anchors of M3D-RPN and regresses both 2D and 3D boxes (35 + 4 classes per anchor).
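The location-adaptive filtering can be sketched as a convolution whose kernel weights and dilation rate are looked up per pixel. In D4LCN these would come from the depth-driven filter branch; here they are simply passed in as arrays, and the single-channel layout and names are assumptions.

```python
import numpy as np


def dynamic_local_conv(x, kernels, dilation):
    """Per-pixel filtering: kernels (H, W, k, k) and integer dilation (H, W) vary by location.

    Zero padding is sized for the largest dilation; like deep-learning layers,
    this computes a cross-correlation.
    """
    h, w = x.shape
    k = kernels.shape[-1]
    r = k // 2
    pad = int(dilation.max()) * r
    xp = np.pad(x, pad)
    offsets = np.arange(-r, r + 1)
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            d = int(dilation[i, j])
            rows = i + pad + d * offsets  # dilated sampling rows in the padded map
            cols = j + pad + d * offsets
            out[i, j] = np.sum(xp[np.ix_(rows, cols)] * kernels[i, j])
    return out
```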

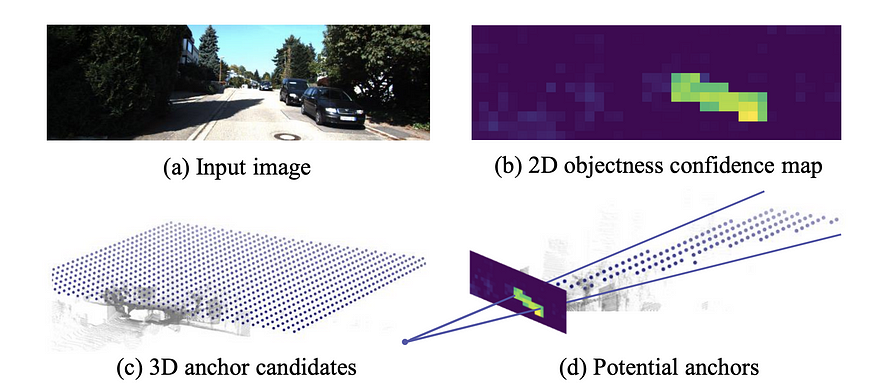

TLNet (CVPR 2019) mainly focuses on stereo image pairs, but it also has a solid monocular baseline. As the mono baseline, it places 3D anchors within the frustum defined by a 2D object detection. It reiterates MonoGRNet's point that pixel-level depth maps are too expensive for 3D object detection, while object-level depth should be good enough. It mimics Mono3D by densely placing 3D anchors (at 0.25 m intervals) in the [0, 70 m] range, with two orientations (0 and 90 degrees) per object class and the average size of that class. Each 3D proposal is projected to 2D to obtain an RoI, and the RoIPooled feature is used to regress position and dimension offsets.
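Frustum-constrained anchor placement can be sketched as follows: lay anchors on a ground-plane grid at 0.25 m spacing, keep those whose projected center falls inside the 2D detection, and project each kept anchor (in two orientations) to a 2D RoI. The camera height (1.65 m), the lateral range, starting the depth range at 0.25 m (to avoid projecting at zero depth), and the class mean size in the usage check are all hypothetical values.

```python
import numpy as np


def box_corners(center, dims, yaw):
    """8 corners (8, 3) of a box in camera coordinates; dims = (l, h, w), yaw about camera y."""
    l, h, w = dims
    x = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * l / 2
    y = np.array([1, 1, 1, 1, -1, -1, -1, -1]) * h / 2
    z = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * w / 2
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])
    return (rot @ np.stack([x, y, z])).T + np.asarray(center, dtype=float)


def frustum_anchors(box2d, K, mean_dims, cam_height=1.65,
                    x_range=(-20.0, 20.0), z_range=(0.25, 70.0), step=0.25):
    """Dense ground-plane anchors whose projected centers fall inside a 2D detection."""
    u0, v0, u1, v1 = box2d
    xs = x_range[0] + step * np.arange(int(round((x_range[1] - x_range[0]) / step)) + 1)
    zs = z_range[0] + step * np.arange(int(round((z_range[1] - z_range[0]) / step)) + 1)
    anchors = []
    for x in xs:
        for z in zs:
            # Camera y points down: a box resting on the ground is centered h/2 above it.
            center = np.array([x, cam_height - mean_dims[1] / 2, z])
            u, v, s = K @ center
            if not (u0 <= u / s <= u1 and v0 <= v / s <= v1):
                continue  # anchor center lies outside the frustum of the 2D box
            for yaw in (0.0, np.pi / 2):  # two orientations per class
                corners = box_corners(center, mean_dims, yaw)
                uv = (corners @ K.T)[:, :2] / corners[:, 2:3]
                roi = np.concatenate([uv.min(axis=0), uv.max(axis=0)])  # 2D RoI for RoIPooling
                anchors.append((center, yaw, roi))
    return anchors
```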

In summary, it is difficult to place 3D anchors directly in 3D space because of the large number of possible locations. An anchor is essentially a sliding window, and exhaustively scanning 3D space is expensive. Therefore, methods that generate 3D bounding boxes directly usually rely on heuristics or 2D object detection to narrow the search; for example, a car usually sits on the ground, and its 3D bounding box lies within the frustum of its corresponding 2D bounding box.